<a href="https://reason.com/2025/07/21/pentagon-awards-up-to-200-million-to-ai-companies-whose-models-are-rife-with-ideological-bias/" target="_blank">View original image source</a>.

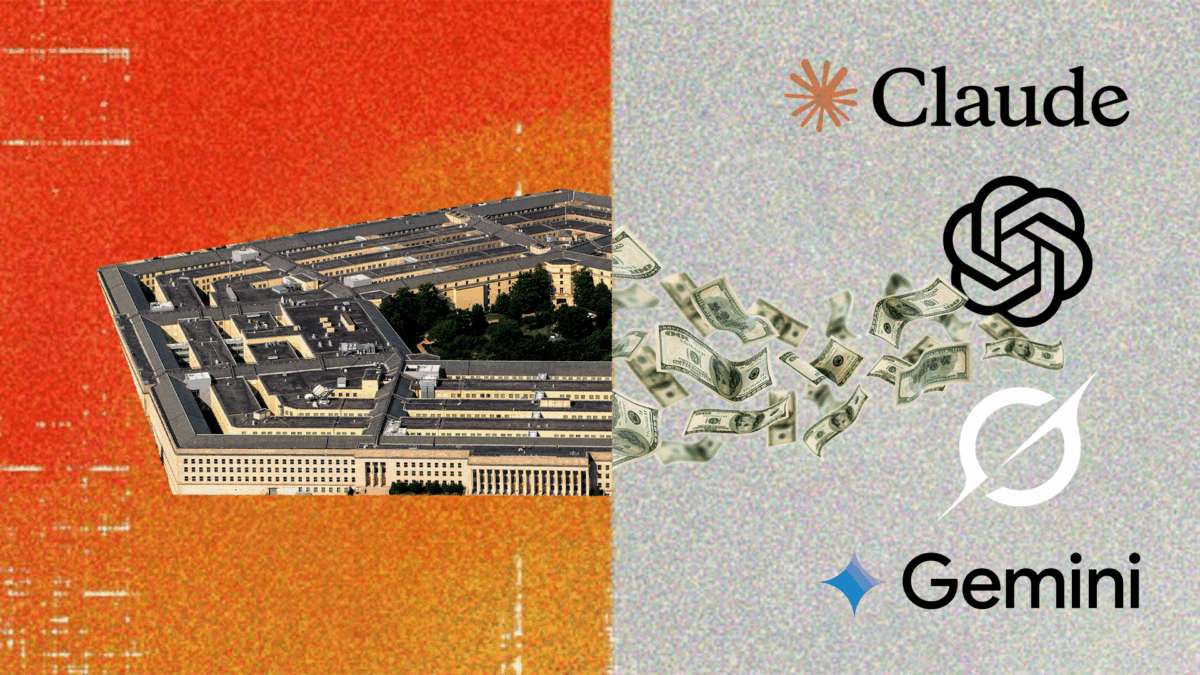

The Pentagon is making a splash in the world of artificial intelligence, awarding contracts worth up to $200 million each to tech titans Anthropic, Google, OpenAI, and xAI to build AI workflows aimed at national security. Sounds impressive, doesn't it? But wait: critics are raising red flags over ideological biases that may be embedded in these models. Imagine AI trained on human biases shaping national security decisions. It's like letting your high school debate team run the country… again.

Take Anthropic's Claude, for example. Rather than relying solely on the typical reinforcement learning from human feedback, it is trained against a written constitution that draws on principles from the United Nations' human rights guidelines. That sounds noble, but since the U.S. is a capitalist powerhouse, some wonder whether these models should align with American values instead. Meanwhile, xAI's approach, which leans on Elon Musk's guidance, has some questioning whether its AI is more about celebrity than substance. Who needs clarity when you've got fame, right?

Experts like Matthew Mittelsteadt worry that the lack of transparency in xAI's documentation does a disservice to national security. With so much cash on the table, we can't help but ask: are these AI systems protecting our interests, or carrying hidden biases that could lead us down a murky path? As the money starts flowing, the tech community is watching closely, hoping for the best but fearing the worst. After all, trusting AI to safeguard democracy is a leap of faith akin to bungee jumping without a cord!

So, what do you think? Should we be worried about ideological bias in AI models, or is it a risk worth taking for the sake of innovation? The debate is heating up, and it's time to weigh in!
